I didn’t see this coming and I think it’s funny, so I decided to post it here.

I was going to suggest, sarcastically, that every function should be a service; then I realized that’s exactly what this article is proposing. Now I’m not even sure I could come up with a more ridiculous proposal.

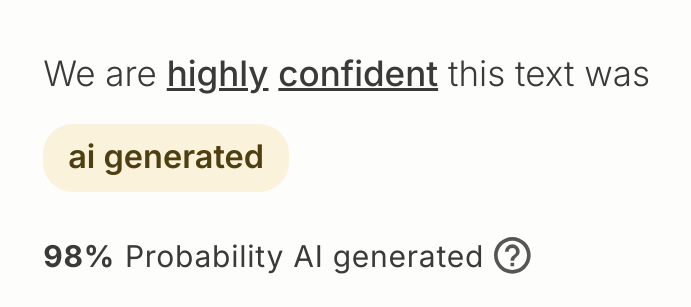

It’s probably AI-supported slop.

Ah, you’re right.

Yeah, I had been willing to give the author the benefit of the doubt that this was all part of a big joke, until I saw that the rest of their blog postings are also just like this one.

IaaS: Instructions as a Service

Want to know if a value is odd? Boy have we got the API for you!

> Boy have we got the API for you!

Why would your whole function be one service? That’s bad for scalability! Your code is bad and the function will fail halfway through 50% of the time anyway. By splitting your function into different services, you can scale the first half without having to scale the second half.

Nanoservices are microservices after your company realizes monoliths are much easier to maintain and relabels its monoliths as microservices.

Unironically. I’d put a significant wager down on that being the source of this term.

That’s exactly what happens at my job.

Announcing FemtoServices™ - One Packet at a Time!

In an era of bloated bandwidth and endless data streams, today we proudly unveil a groundbreaking approach to networking: FemtoServices™ – Connectivity, one Ethernet packet at a time!

(Not to be confused with our premium product, ParticleServices, which just shoots neutrinos around one by one.)

Can’t wait to set up a Docker container for a service that takes a string as input and returns a number as output. Full logging, its own certificate for encryption, five pages of config options, and of course documentation. Now, you want to add two numbers together? You’ve got the addition service set up, right?

It’s a modern-day enterprise FizzBuzz: https://github.com/EnterpriseQualityCoding/FizzBuzzEnterpriseEdition

left-pad as a service.

quantum services

take your source code and put each character in its own docker container

this gives you the absolute peak of scalability and agility as every quantum of your application is decoupled from the others and can be deployed or scaled independently

implementing, operating and debugging this architecture is left as an exercise for the reader

that will be $250,000 kthx

> implementing, operating and debugging this architecture is left as an exercise for the reader

Challenge accepted by a reader using AI. What could go wrong? xD

This “article” was written by AI, wasn’t it? It’s just throwing vague buzzwords around.

I dunno, people were doing that long before AI.

That intro and general structure (AI loves bulleted lists, but then again, so do I) sure sound like a lot of the AI responses I’ve gotten. As always, it’s hard to say for sure.


gotta keep Wirth’s law going strong

Planck services

My services are so small that it is impossible to know just how fast they are running!

I am now offering Planck services for sale, at US$0.0001 per bit.

For an extra fee, you can even choose the value of the bit.

Infinitesimal services. It’s effectively no service, but it pays better.

We already have nanoservices, they’re called functions. If you want a function run on another box, that’s called RPC.
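To make the “functions vs. RPC” point concrete, here is a minimal sketch using Python’s standard-library `xmlrpc` modules. That choice of RPC mechanism is an assumption (the comment doesn’t name one), and the `add` function and localhost server are hypothetical, for illustration only. The same plain function is called once locally and once over the network.

```python
import threading
from xmlrpc.client import ServerProxy
from xmlrpc.server import SimpleXMLRPCServer


def add(a: int, b: int) -> int:
    """A plain function: the 'nanoservice' before anyone deploys it."""
    return a + b


def start_rpc_server() -> SimpleXMLRPCServer:
    """Expose `add` over XML-RPC on an ephemeral localhost port."""
    # Port 0 lets the OS pick any free port; logRequests=False keeps output quiet.
    server = SimpleXMLRPCServer(("127.0.0.1", 0), logRequests=False)
    server.register_function(add, "add")
    # Serve requests in a background thread so this same script can be the client.
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


if __name__ == "__main__":
    local_result = add(2, 3)  # ordinary call: no network involved

    server = start_rpc_server()
    host, port = server.server_address[:2]
    with ServerProxy(f"http://{host}:{port}/") as proxy:
        remote_result = proxy.add(2, 3)  # same function, now "a service" via RPC
    server.shutdown()
    server.server_close()

    print(local_result, remote_result)  # prints "5 5"
```

The call site barely changes, but the remote version now involves serialization, a socket, and a whole new set of ways to fail, which is roughly the trade-off the thread is joking about.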

I’m trying to understand how this is different from a concept I learned in computer science in the late ’80s/early ’90s: RPC (remote procedure calls). My senior project in college used them. Yes, I’m old, and this was 35 years ago.

Microservice architectures are ad hoc, informally specified, bug-ridden, slow implementations of Erlang, implemented by people who think that “actor model” has something to do with Hollywood.

OK, this made me chuckle out loud.

Neovimservices ftw

You know what they say: micro services, macro outages.

This is just distributed functions, right? That’s been a thing for years: AWS Lambda, Azure Functions, GCP Cloud Functions, and so on. Not everything that uses these services is built on a distributed-functions model, but a fuck ton of enterprises have been doing it for a long time.


Tech moves in cycles. Every so often we come back to the same half-baked ideas, imagine we’ve just discovered them, and then build more and more technology on top to try to fix the concept’s foundational problems until something shinier comes along. A lot of tech work is “There Was an Old Lady Who Swallowed a Fly”.

I keep saying, “You cannot plan your way out of a system built on broken fundamentals.” Microservices have their use cases, but not every web app needs to be one. There are too many buzzwords floating around in tech that promise things that can’t be delivered.

Yep, microservices are great, but monoliths are just as great, and don’t let anyone tell you otherwise. It all depends on what the system requirements are.